The Problem

Unpredictable GPU demand made scalable training hard to sustain.

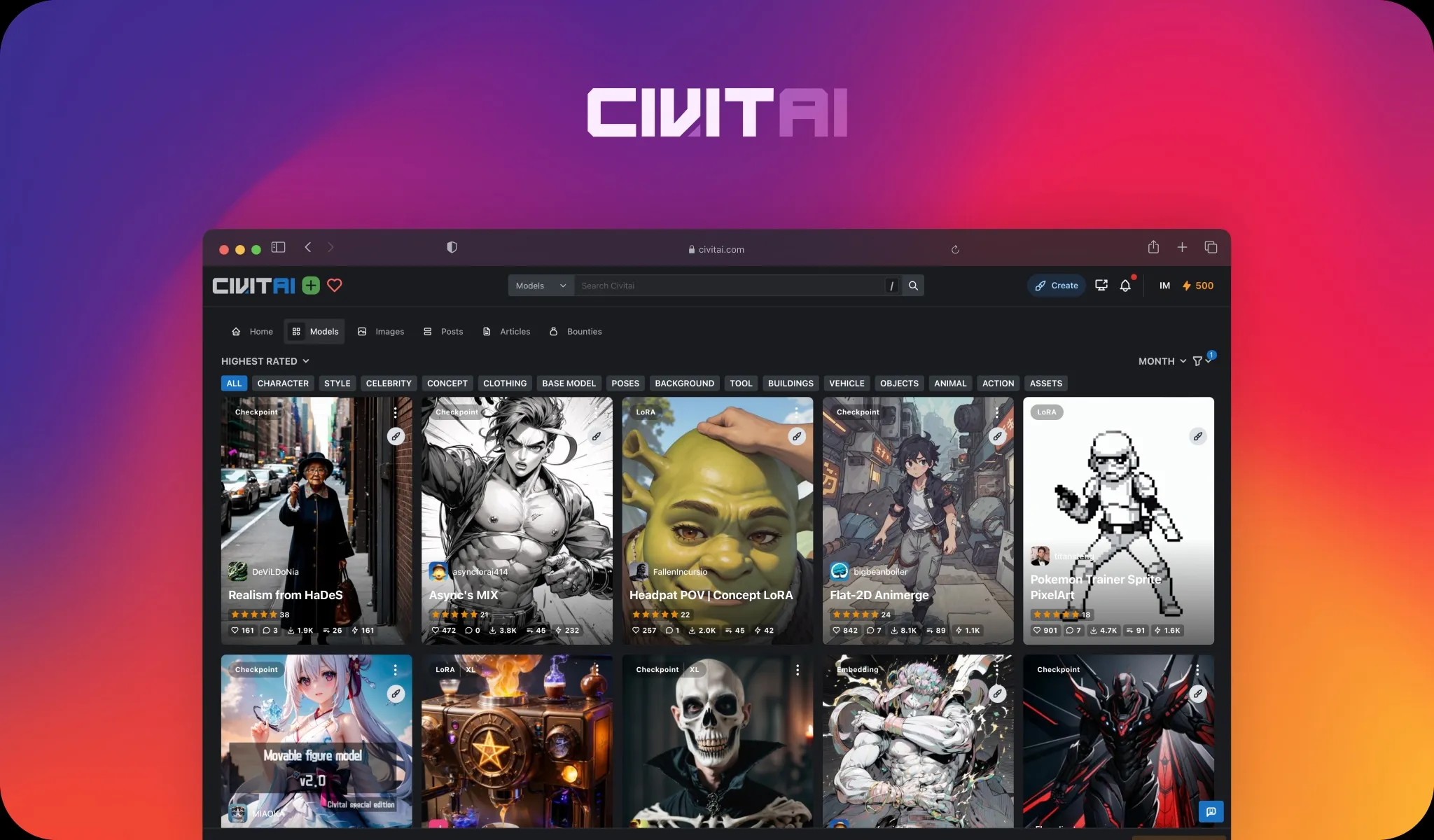

Civitai’s platform lets users fine-tune their own models using Low-Rank Adaptation (LoRA), a method that customizes a base diffusion model by training a small set of low-rank adapter weights instead of the full model, which keeps training lightweight and resource requirements low.

As the number of users and LoRA uploads exploded, so did the demand for GPU infrastructure to support training. But this workload wasn’t predictable. It spiked with new trends, community challenges, and viral moments. Supporting it meant Civitai needed:

- Flexible, scalable infrastructure that could handle bursts of demand

- Affordable GPU resources that kept training accessible to a broad, global user base

- Minimal cold start times to enable real-time, frictionless creativity

Traditional providers couldn’t meet these needs without compromising on cost or user experience. So Civitai turned to RunPod.

The Solution

RunPod enabled fast, cost-efficient training for millions of LoRAs.

Rather than powering inference or generation directly, RunPod became Civitai’s engine for training LoRAs at scale.

Civitai runs its LoRA training workloads on a combination of RunPod’s Secure Cloud and Community Cloud, giving users access to both dedicated and affordable GPU resources for model fine-tuning—without requiring their own hardware.

Every day, creators use RunPod-powered infrastructure behind the scenes to fine-tune new models, iterate on styles, and contribute to the open-source ecosystem. And it adds up—fast.

“Last month alone, we trained 868,069 unique LoRAs on your platform,” one of Civitai’s engineers shared.

Each of these LoRAs is previewed with three generated images, which works out to more than 2.6 million image generations on RunPod in that month alone. These generations aren’t end-user outputs; they’re training artifacts used for validation and previews.

While the full 50 million monthly image generations on Civitai are powered by separate infrastructure, many of those generations use LoRAs that were trained on RunPod. In this way, RunPod powers the creator input layer that fuels the rest of the ecosystem.
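For readers who want to check the numbers, the arithmetic behind these figures can be sketched in a few lines of Python. The variable names are illustrative, the 50 million figure is treated as an approximation, and the ratio is only a rough size comparison between two separate workloads, not a statistic reported by Civitai or RunPod.

```python
# Figures quoted in the article; variable names are illustrative only.
loras_trained_last_month = 868_069
previews_per_lora = 3
platform_generations_per_month = 50_000_000  # approximate, per the article

# Preview images generated on RunPod as a by-product of training.
preview_generations = loras_trained_last_month * previews_per_lora

# Rough scale comparison against platform-wide generations
# (which run on separate infrastructure).
ratio = preview_generations / platform_generations_per_month

print(f"{preview_generations:,} preview images")            # 2,604,207 preview images
print(f"{ratio:.1%} the size of platform-wide generation")  # 5.2% the size of platform-wide generation
```

In other words, the preview workload alone is about one-twentieth the size of all image generation on the platform, before counting the training runs themselves.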

The Result

A thriving creator ecosystem powered by seamless training.

By offloading training infrastructure to RunPod, Civitai has been able to:

- Scale its LoRA training pipeline without cost overruns or bottlenecks

- Keep training accessible to non-experts, hobbyists, and pro users alike

- Accelerate time-to-creation, helping users turn ideas into models and publish them back into the community faster

This has helped reinforce Civitai’s identity not just as a hosting platform, but as a creative lab—where users don’t just download models, they shape them.

“RunPod helped us scale the part of our platform that drives creation. That’s what fuels the rest—image generation, sharing, remixing. It starts with training.”

Looking Ahead

Fueling the creative stack, not just the output.

Civitai’s team is focused on continuing to support creators, from infrastructure and training to community competitions and content discovery.

Initiatives like Project Odyssey, the world’s largest AI film competition, reflect how the platform empowers experimentation far beyond still images. And none of it works without scalable, reliable infrastructure behind the scenes.

By partnering with RunPod, Civitai ensures that anyone can start with an idea, train a model, and create something new—whether or not they have a GPU of their own.

Because when the tools to train are open, the possibilities to generate become endless.